Research done in collaboration with Stu Miniman

Premise

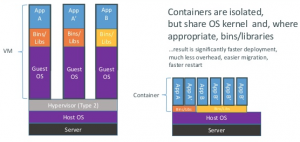

Containers are emerging as the preferred way for application developers to build and run their software anywhere. Docker made an existing technology (LXC) easy to use for developers and a swarm of vendors are embracing the technology, including the leaders and challengers in cloud and enterprise IT. Containers are shaking up the industry as they enable the enterprise to accelerate the modernization of software development. This means enterprises need to become familiar with containers today and start testing and defining the approach to adopting this technology.

Contents

- The Why: Why Containers? Why Now? Why the Enterprise?

- The Now: The Platforms and the Players

- The Conversation: Enterprise Container Opportunities and Challenges

- Final Thoughts

The Why: Why Containers? Why Now? Why Enterprise?

Containers are the latest technology to emerge from the industry’s evolutionary repeat/until true loop. Like all good technologies, what we see today is built on the shoulders of giants: container technology goes all the way back to 1979. It has been used in production systems at Google for about a decade, which makes Google a proving ground for scalability, management and reliability, although the wide variety of enterprise software is not run there.

For a nice infographic on the history of containers, see Pivotal’s Moments in Container History.

Containers are compared and contrasted to virtual machines and this goes beyond just the technology. Just over ten years ago, virtualization struggled to move from the desktop to the datacenter and experienced significant enterprise headwinds with rejections similar to what containers are experiencing today:

- We don’t need it, virtual machines are good enough

- Cost of change is too high

- Will to change is too low

- Ability to change is too hard

- Security isn’t good enough

However, just like virtualization, containers have a rapidly growing hard core of important developers and supporters. Containers are more enterprise-relevant today than ever, and this will improve further in 2015.

A number of companies have played a role in the emergence of containers but perhaps Google and Docker are the highest mountains in the range of contributors.

Google developed the container technology, open sourced it and contributed it to the standard Linux kernel, and has battle tested it in production over a long period. However, you needed to be a Linux engineer to make this work.

Docker makes containers easy by solving some gnarly problems and building an ecosystem that makes developers’ lives easier and more productive. That’s a winning combination amongst coders.

Developers’ lives are made easier, which matters to the businesses that run on their applications, because:

- A developer can focus on the app and (mostly) forget about the infrastructure, because containers are about what’s inside of them, not outside of them.

- A developer’s environment and workflows are simpler. The infrastructure tools to build virtual machines to install an OS then install the libraries then install the app are mostly removed, making their environment cleaner (= fast = quality).

- Docker moves the spin up and spin down of instances from minutes or seconds to milliseconds. This makes developer workflows like continuous integration and testing very fast, which increases the pace of iteration and in turn gives the enterprise a more productive software team.

- The Dependency Hell Demons are mostly constrained. Whilst the claim of “Write Once; Run Anywhere” is only mostly true, and the demons of Dependency Hell are only mostly banished, if the developer is aware of the limitations these demons can be constrained.

- Containers are becoming ubiquitous, so the Docker tagline of “Build, Ship and Run: Any App, Anywhere” is becoming a reality for containerized applications, and for the enterprise this is the ultimate lock-in killer.

Five strategic reasons for enterprise adoption of containers

Looking at Containers from an Enterprise perspective you can see five strategic reasons to adopt them:

| Reason | Why it matters |
| --- | --- |
| Applications evolution | So-called ‘cloud native’ applications use new architectures such as microservices, a move away from monolithic architectures built on large VMs or physical servers. Containers are ideal deployment units for these new architectures. |
| Operations improvements | Cloud providers will offer containers as a service, which will be faster to deploy, cheaper to run and easier to manage than virtual machines. Enterprises are seeing PaaS products that focus on enterprise-class operations now use containers as a target. |
| Distribution efficiency | As developers lead the charge on the use of containers, it follows that ISVs will adopt them and start to package their applications in containers. This will help ISVs with their SaaS model, and will lead to them distributing their software in containerised packages instead of virtual machines. |
| Platform focus | The container technology is of interest in itself, but the operational paradigms building around it are of the most interest to enterprises and service providers. These include more mature operational models and future possibilities such as injecting security telemetry. |
| DevOps alignment | Containers and their management systems are creating glue between developers and operators and are a technological crutch for the burgeoning DevOps movement. The phrases “you build it; you run it” (Werner Vogels) and “well it ran on my laptop” (anon?) are all about the developer taking more responsibility for the operation of the application, and containers provide one mechanism to do this. |

Containers are ready. We have seen that containers have a surprisingly long pedigree measured in decades and that the technology has evolved from proven production systems such as those at Google. Docker are the leader of the pack of platforms and players that are making containers useful to enterprises. Enterprises have significant reasons to be talking about adopting containers.

The Now: Platforms and Players

Whilst enterprises are at different stages of container adoption, the technology industry is seeing mass adoption with everyone “doing containers” in some way.

Docker is the star of the container movement and everyone is talking about them. In 2014 you couldn’t go to a conference without them being mentioned. This year they plan to make it all even easier and more secure.

CoreOS were a contributor to Docker’s container project but decided to split and do their own Rocket container system due to a different focus.

Red Hat believe in Docker so much that they delayed the release of RHEL 7 to make sure that Docker containers could be supported as a first class citizen (Docker founder Solomon Hykes was on theCUBE at Red Hat Summit – video embedded below). OpenShift, the Red Hat PaaS, also works with Docker.

Microsoft recently announced Nano Server, which allows containers to run natively in the Microsoft OS, meaning that you can run processes, VMs or containers all in the same OS. Linux and Windows containers will not be compatible, but both can be managed with the same Docker tools. This solution will cover the Azure cloud and on-premises deployments (with Windows and Hyper-V). Big news.

AWS has its EC2 Container Service (ECS), which integrates with Docker, in preview.

VMware have the option of letting you run containers in VMs on their hypervisor (and therefore also in the vCloud Air hybrid cloud). Read VMware’s Kit Colbert piece on Containers without Compromise.

Pivotal has integrated with Docker and Rocket via their latest evolution of Pivotal CF (CloudFoundry) with Lattice.

OpenStack is working on integration and IBM, an OpenStack contributor, has announced Enterprise Containers.

Kubernetes is an orchestration and scheduling tool to run clusters of containers. Kubernetes comes from Google, who have been doing this the longest and have moved beyond managing a few containers to managing them at scale. The Cloudcast episode with Kismatic CEO Patrick Reilly gives a good primer on this topic.

Apache Mesos can work with Docker containers and takes an application-centric approach in which applications manage the resources they need across many hosts. Mesos was used at Twitter and is credited with helping it scale globally (theCUBE interview with Mesosphere CEO Florian Leibert from OpenStack SV).
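To make "scheduling clusters of containers" concrete, here is a minimal sketch of the core idea: matching container resource requests to hosts with spare capacity. It uses a simple first-fit rule and is not the algorithm Kubernetes or Mesos actually uses; the `Host` class, the `schedule` function and the host and container names are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A hypothetical cluster node with its unallocated capacity."""
    name: str
    free_cpu: float  # CPU cores still unallocated
    free_mem: int    # memory in MiB still unallocated
    containers: list = field(default_factory=list)


def schedule(requests, hosts):
    """Place each (name, cpu, mem) request on the first host with room.

    Returns a dict mapping container name to host name, or to None
    when no host can fit the request (first-fit, no rebalancing).
    """
    placement = {}
    for name, cpu, mem in requests:
        placement[name] = None
        for host in hosts:
            if host.free_cpu >= cpu and host.free_mem >= mem:
                # Reserve the resources on this host and stop searching.
                host.free_cpu -= cpu
                host.free_mem -= mem
                host.containers.append(name)
                placement[name] = host.name
                break
    return placement


# Illustrative data only: two hosts and four container requests.
hosts = [
    Host("host-a", free_cpu=2.0, free_mem=2048),
    Host("host-b", free_cpu=4.0, free_mem=8192),
]
requests = [
    ("web", 1.0, 512),
    ("db", 2.0, 4096),
    ("cache", 1.0, 1024),
    ("batch", 4.0, 1024),
]
placement = schedule(requests, hosts)
print(placement)
```

In this toy run the `batch` request stays unplaced because neither host has four free cores left. Real schedulers add constraints, priorities, health checks and rescheduling on failure, which is where the operational value of these platforms lies.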

The Conversation: Enterprise Opportunities and Challenges

Enterprise IT is going to be disrupted by containers because its software teams will want to use them, and because its technology partners in the industry, from software vendors to cloud service providers, are also being disrupted by them. Just look at Microsoft’s announcement: one of the biggest enterprise vendors on the planet has adapted its strategy to fit containers.

For the enterprise container conversation it’s useful to look at the impact containers have from the perspective of at least five enterprise IT concerns:

1. Impact on Developers

More and more non-IT businesses, such as car manufacturers, are recognising that they have big software engineering capabilities. They are embracing new software architectures such as microservices, and because containers fit well into a microservice architecture this should further increase the adoption of containers in the enterprise.

For more on Microservices, go to the source: Martin Fowler. For an insight into the challenges, read Testing Strategies, also by Martin. Containers require that platforms provide the tools to make Microservices achievable, because microservices need the routing and other capabilities that platforms like PaaS systems offer, rather than handcrafted equivalents.

2. Impact on Software Vendors

Whilst enterprises might build some of their software, they also buy software “off the shelf” and now rent more software than ever before via subscription SaaS services.

The software vendors that enterprises buy or rent from are likely to adopt containers as a distribution/deployment unit once past the tipping point of enterprise adoption of containers (similar to the way ISVs only embraced VM Appliances after enterprises standardised on VMs).

As ISVs adopt containers in their development workflows it will be natural to use containers to ship bits (download) and serve bits (as a service), but they could still do “containers without compromise” and ship software in containers in VMs to meet a wider enterprise market.

For software vendors that provide infrastructure software (software defined *), the future is interesting as containers could usurp VMs as the unit of deployment which means that hypervisor demand could decrease which will impact revenues of those vendors. It also means that the whole hypervisor-ecosystem could weaken as the focus on software-defined datacenter moves away from hypervisor and to the application. This is a long term view but worth considering.

3. Impact on Cloud Service Providers

Applications that work in containers don’t necessarily need VMs, unless there are constraints put on the application that must be provided by a VM (e.g. public cloud tenant isolation).

IaaS today is mostly about providing VMs as the unit of deployment for applications, unless you are Google who were the ones using containers in their cloud before everyone else.

Cloud providers are starting to offer containers as a deployment unit but what is the impact to all of the important and difficult IaaS machinery that was built around VMs? Billing units? Monitoring interfaces? Providing a container via an API is only part of the provider story to be checked out.

The other impact on IaaS is to reduce its importance by pointing the developers at a different entrance to the cloud, which is the strategy for Pivotal CloudFoundry and Red Hat OpenShift.

Enterprises might wonder: Why bother with all that VM stuff when what you really want to do is applications? Perhaps all we need is a platform and not infrastructure. The move of Josh McKenty from Piston Cloud, an OpenStack IaaS player, to Pivotal is indicative of this shift of focus.

The portability of applications is also a potential route to cloud brokering and reducing cloud lock-in as your apps are more mobile across cloud service providers. PaaS providers already provide deployment of apps to different clouds, and the emergence of container-anywhere companies such as Tutum is a sign of things to come.

4. Impact on Software Defined Datacenter

Virtual machines delivered benefits, broke some traditional IT architecture and operations practices, and provided new ways of doing things.

The software defined datacenter is an abstract term to cover all emerging solutions in the network, storage, compute, data, applications, security and management aspects of enterprise IT and the virtual machine and the hypervisor are central to this.

Containers are more application-centric and software-defined than virtual machines, and software-defined infrastructure concepts are starting to embrace them. One of the first is software-defined networking, and Docker’s move to acquire SocketPlane is an indicator of how important this field is.

Enterprises that have software-defined approaches in place will need to make sure they work with containers when considering adoption. Where containers are not reachable by software-defined tech, VMs may need to wrap around them, as the VM (or the hypervisor) is currently the connection point.

5. Impact on Security

Security of container technology to meet enterprise and public cloud standards is an ongoing job. There are workarounds and technologies in place to make this good quality, but some enterprises are waiting for future container versions.

Outside of the container there are changes in the security industry around telemetry, identity and distributed network security that might not work with containers so this is something that needs to be part of the enterprise container conversation up front.

Final Thoughts

Containers are disrupting the industry and will disrupt enterprise IT as they become easier to use and embed into the software and services that the enterprise produces AND consumes. 2015 is likely to see an exponential take up in containers across the industry compared to 2014. It is crucial for enterprises to get onto the front foot, lean in to containers and start testing them now, because they will change areas of the enterprise (e.g. skills, operations, workflows) that are historically slow to change due to enterprise complexity. Containers and the ecosystem around them should be considered a catalyst for change in the enterprise: a way to remove processes, infrastructure complexity and cloud lock-in, and to speed up innovation.

Action Item: Have the Enterprise Container Conversation in your organization NOW. Improve the education on what containers are and where they are going, and learn the impact on your organization. Do you build and/or buy applications? What can go in containers today, and to which target (cloud)? Start using them today and work out what the future would look like if you could containerise 51% of your applications and create your own tipping point.
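One way to start that conversation is a rough inventory of the application portfolio. The sketch below is only an illustration under stated assumptions: the application names, the `source` and `container_ready` fields and the `containerised_share` helper are hypothetical, and the 51% threshold is simply the tipping point mentioned above.

```python
TIPPING_POINT = 0.51  # the 51% share used in the action item above


def containerised_share(apps):
    """Return the fraction of apps flagged as able to run in a container."""
    if not apps:
        return 0.0
    ready = sum(1 for app in apps if app["container_ready"])
    return ready / len(apps)


# Hypothetical portfolio: a mix of built and bought applications.
portfolio = [
    {"name": "web-frontend", "source": "build", "container_ready": True},
    {"name": "order-api", "source": "build", "container_ready": True},
    {"name": "erp-suite", "source": "buy", "container_ready": False},
    {"name": "reporting", "source": "buy", "container_ready": True},
]

share = containerised_share(portfolio)
past_tipping_point = share >= TIPPING_POINT
print(f"{share:.0%} container-ready, past tipping point: {past_tipping_point}")
```

Splitting the same count by `source` would show whether the gap sits mainly in software you build or software you buy, which points to whether the next conversation is with your developers or your vendors.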

See SiliconANGLE.tv for many upcoming events where theCUBE will cover this topic including OpenStack Summit Vancouver, DockerCon, Red Hat Summit, VMworld, AWS re:Invent and more. The Wikibon team welcomes your feedback and input – please share with Steve and Stu your questions and successes.