Hadoop is not going to replace the data warehouse, at least not any time soon. But the open source Big Data framework does pose a threat to the cushy profit margins of data warehouse vendors including, but not limited to, IBM, Teradata, Microsoft and Oracle.

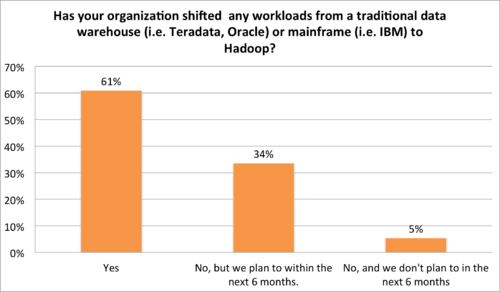

According to data from Wikibon’s recent Big Data survey, more than 60% of Big Data practitioners who have deployed Hadoop have migrated at least one workload from a “traditional” data warehouse or mainframe to Hadoop. Another 34% expect to do so in the next six months. The majority of these workloads involve data transformations – a not unexpected finding. But not far behind are business intelligence reporting workloads, the bread-and-butter of the enterprise data warehouse.

This trend is expected to continue as Hadoop vendors improve their so-called SQL-on-Hadoop offerings. These technologies, from vendors such as Cloudera, Hortonworks and Actian, allow data scientists, as well as less sophisticated business analysts and business users, to query data in Hadoop using ANSI SQL-like tools (some SQL-on-Hadoop offerings have more complete SQL functionality than others). They also allow developers to build Hadoop-based data-driven applications.

Feedback from both the aforementioned Big Data survey and in-depth conversations with Big Data practitioners indicates that Hadoop’s encroachment on the data warehouse’s territory is partly driven by cost. One practitioner at a large national insurance company told Wikibon that his company has frozen its data warehouse spend by shifting workloads to Hadoop and expects to decrease its data warehouse bill significantly over the next three to five years as a result.

But other important factors are also at work, not least of which is Hadoop’s ability to store and process all manner of data – structured, semi-structured and unstructured.

As Hadoop becomes more real-time and multi-application capable thanks to YARN, this overlap with data warehousing and even high-performance analytic databases will increase. Tresata, a Charlotte, N.C.-based start-up, has built real-time fraud detection applications on Hadoop and Spark that are live and in use now at Fortune 500 companies. Such applications were previously the exclusive province of high-performance databases such as Netezza, if they were possible at all.

At Hadoop Summit 2014 in early June, all the major data warehouse vendors were in attendance. Each is trying to contain the threat posed by Hadoop. Some couch their language in terms of opportunity, and indeed, Hadoop will bring more data under management at most enterprises, and some of that data will work its way to the data warehouse. Vendors such as Teradata are building reference architectures that include Hadoop and developing tools that make it easier to ingest Hadoop data into more costly data warehouses for higher-value analytic workloads. This is a valid approach.

Others, such as IBM, are also counting on their high-margin professional services and up-the-stack analytics software to mitigate the threat Hadoop poses to data warehouse revenue.

And most, if not all, of the data warehouse vendors point to Hadoop’s relative immaturity to dissuade practitioners from building mission-critical applications on the open source framework. Some of these criticisms have merit, as Hadoop’s security, performance and data management capabilities are still lacking for particular use cases.

Action Item: The reality is that Hadoop will replace the data warehouse for specific workloads for which it is best suited, but not for everything. As a result, Hadoop vendors and data warehouse vendors are indeed competing for some of the same dollars, and this competition will only increase as Hadoop matures. Data warehouse vendors should accept the ascendance of Hadoop as a first-class citizen in the data center and position their products and services as value-add components that help practitioners turn raw data stored in Hadoop into actionable insights on executives’ desks.