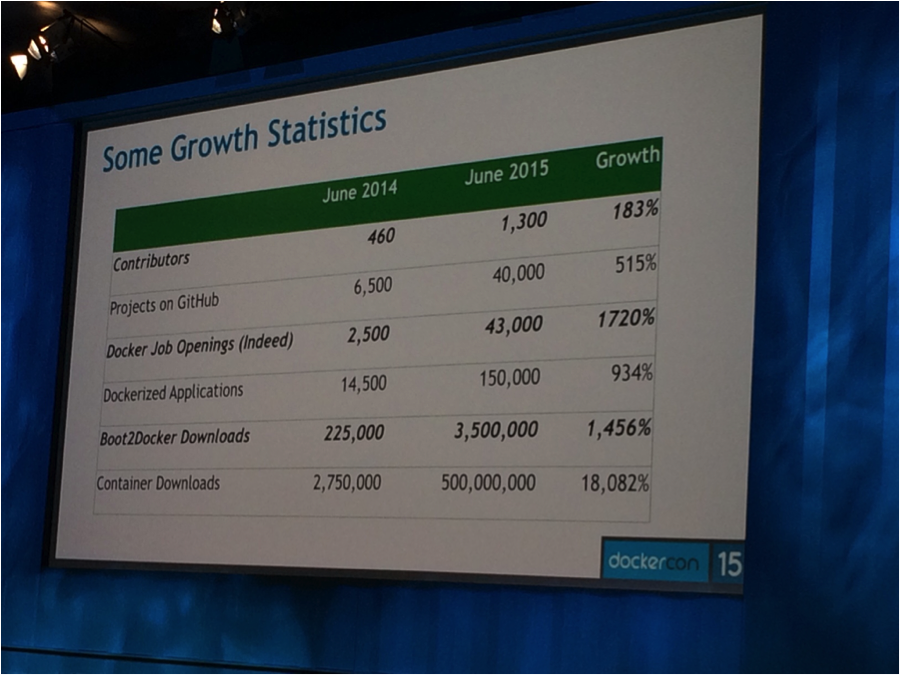

“The growth rate of Docker has gone beyond ludicrous, it’s now plaid. 18,000%, that’s not a usual percentage to put on a graph.” – Adrian Cockcroft, on theCUBE, speaking of Docker CEO Ben Golub’s keynote presentation at DockerCon 2015 in San Francisco.

Premise: If containers are at the center of a shift in how applications are developed and delivered, and their pace of growth and change is unprecedented in IT history, this could have a massive ripple effect on both suppliers and consumers of the ecosystem of IT technologies.

[Below: Adrian Cockcroft on theCUBE at DockerCon 2015]

Most packaged, commercial software, such as VMware vSphere, Oracle or SAP, ships every 12-18 months. Some of the larger open source projects, such as OpenStack, have a release cadence of every 6 months. But after announcements from several events last month (DockerCon, Red Hat Summit and Monitorama), it’s becoming clearer that the pace of software releases may begin to accelerate to every couple of months. This means that Wikibon and others will need to revisit analysis and update findings more frequently as well – for example, Time for the Enterprise Container Conversation was written just two months ago, and some aspects of it need to be reviewed and updated.

In that report, Wikibon examined three key areas:

- The Why: Why Containers? Why Now? Why the Enterprise?

- The Now: The Platforms and the Players

- The Conversation: Enterprise Container Opportunities and Challenges

Let’s explore how the announcements from these Summer 2015 events may have validated or changed the initial assessment of the Enterprise Container Conversation.

[Re-Examining] Why Containers? Why Now? Why the Enterprise?

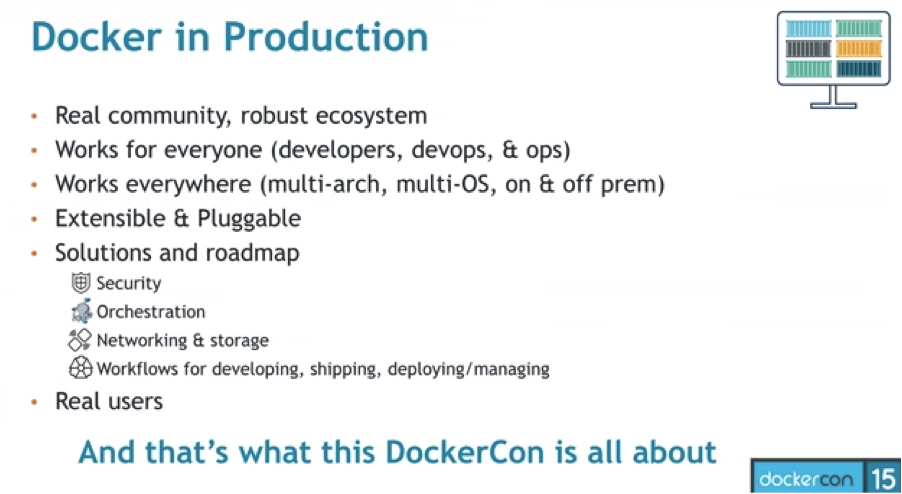

The theme of DockerCon 2015 was “Running Docker in Production”, and attendance was announced at 2000-2500 people, almost 5x the year before. During Red Hat Summit, Red Hat Executive Vice President Paul Cormier made the case that Red Hat should be “THE container company.” At both events, large Enterprise companies such as IBM, Microsoft, EMC, VMware, Cisco, HP, AWS and others had a large presence and were trying to align their portfolios to this emerging technology and community. Without question, Enterprise IT vendors are now on-board with Docker and containers. As Battery Ventures VC Adrian Cockcroft said, “Docker wasn’t on anybody’s roadmap in 2014, but it’s on everybody’s roadmap in 2015.”

There is no doubt that containers have the attention of the mainstream media and the vendor community, but the question remains whether Docker has moved into mainstream customer usage yet. This is an interesting question to unpack, because customer testimonials trail the community numbers. For example, Docker CEO Ben Golub stated that downloads of Docker had grown from 3M in 2014 to over 500M in 2015.
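As a rough sanity check on those download figures, the percentage growth can be worked out directly. This is only a sketch: the function name is illustrative, and because the 2015 figure was quoted as "over 500M", the value used here is a floor and the result a lower bound.

```python
def percent_growth(start: float, end: float) -> float:
    """Return the percentage increase from start to end."""
    return (end - start) / start * 100.0


downloads_2014 = 3_000_000
downloads_2015 = 500_000_000  # quoted as "over 500M", so treated as a floor

growth = percent_growth(downloads_2014, downloads_2015)
print(f"{growth:,.0f}% growth")
```

With these inputs the result is roughly 16,600%, the same order of magnitude as the 18,000% figure quoted from the keynote. The exact percentage depends on the actual 2015 download count.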

These are astounding growth rates by any measure of technology growth cycles. Now the question to explore is how many actual customers this translates into and how those customers are using Docker technology. Several customers were highlighted during the week, either in keynotes or breakout sessions – GSA + Booz Allen, Business Insider, Capital One, GE, GrubHub, New York Times, Nerdalize, Orbitz, and PayPal. While they are on the bleeding edge, they begin to show a cross-section of the types of use-cases and industries that see value in containers and microservices application architectures.

Are these customers using Docker in external-facing production, or internal-facing production?

Will this eventually translate into broad market adoption and revenues, or is Docker the friction-less infrastructure equivalent to the SaaS “freemium” model, where 1-5% of customers are monetized?

From an Enterprise perspective, Docker has three things working in its favor:

- It appeals directly to developers, which means it has closer linkage to business decision-makers than some of the other hot trends at the moment, such as software-defined networking or software-defined storage.

- It is directly aligned to the concepts of Cloud Native applications, DevOps and Microservices, which appeal to both development and operations teams that are trying to determine the best ways to build and operate the next generation of applications for Cloud, Mobile and Big Data.

- It solves some of the cloud portability issues that virtual machines have not solved, which means it will appeal to leading CIOs who want to build Hybrid Cloud.

[Re-Examining] The Now: The Platforms and the Players – New Announcements

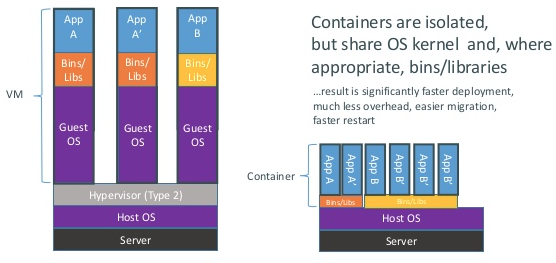

For the past year, the focus of Docker (and containers) was in the context of how it differed from the well-known model of using virtual machines to build data center applications. During DockerCon 2015, that conversation significantly expanded in a number of ways.

The New Container Standard – “runC”

In late 2014, the nascent container industry was thrown a curveball when CoreOS introduced an alternative to Docker’s container runtime format, called “rkt” (pronounced “rocket”). This fragmentation caused some concern in the industry about how it would impact adoption rates. Some of these concerns should ease now that a new, open-source standard for container runtimes has been announced – called “runC”. runC was endorsed by most of the major technology vendors, including both Docker and CoreOS, and it will include contributions from the Docker, rkt and appc maintainers.

This is not only a win for the industry at large, but also a win for Docker. This means that customers and technology companies will be able to build against a single, open standard. And it means that Docker will be able to avoid a loss of momentum around containers due to fragmentation of low-level “plumbing” technology.

Robust Container Networking

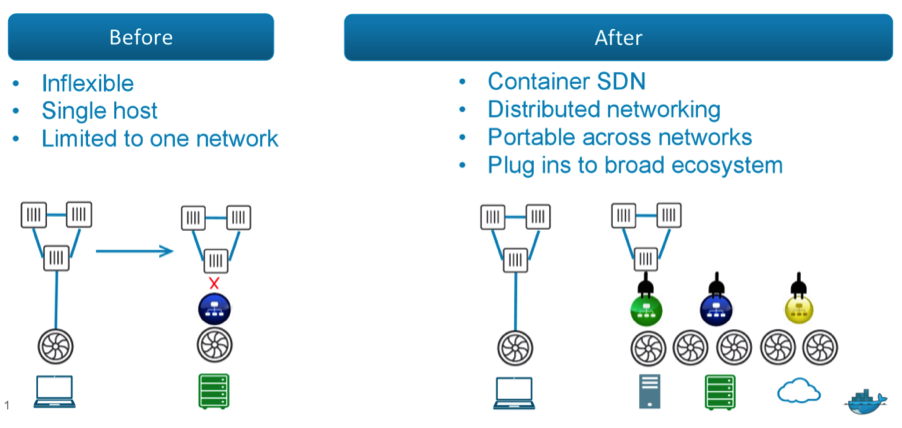

When virtualization was beginning to gain popularity around 2006-2007, one of the biggest barriers to adoption was networking. Adding virtualized resources within a physical server moves the traditional point of demarcation from an Ethernet port (run by the networking team) to a virtual switch or virtual port (run by the virtualization team). While this challenge has mostly been solved for virtual machines, the problem has re-emerged for container-centric deployments. Not only do containers move the demarcation point, but they also add two further levels of complexity:

- The lifespan of a container is variable, so it might exist for just a few seconds or it could run long-lived jobs and applications. When variability occurs in networking, complex (and often bad) things tend to happen.

- Following the existing best practice guidelines, many companies are running a single application (or microservice) per container. This means that the number of containers will most likely dwarf the number of virtual machines. Managing these large numbers of containers adds another level of complexity to operations, especially networking (MAC tables, IP Addressing, DNS Naming).
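To make the addressing problem concrete, here is a minimal sketch of a toy address manager for a single subnet. The `SubnetAllocator` class and its method names are hypothetical and exist only for illustration. This is not how Docker or libnetwork assigns addresses. It only shows that short-lived containers turn address assignment into constant churn, and that one container per microservice can exhaust a small subnet quickly.

```python
import ipaddress


class SubnetAllocator:
    """Toy IP address manager for one subnet (hypothetical, for illustration)."""

    def __init__(self, cidr):
        # Usable host addresses in the subnet, in ascending order.
        self._free = list(ipaddress.ip_network(cidr).hosts())
        self._leases = {}  # container id -> address currently assigned

    def start(self, container_id):
        """Assign the next free address to a starting container."""
        if not self._free:
            raise RuntimeError("subnet exhausted")
        address = self._free.pop(0)
        self._leases[container_id] = address
        return address

    def stop(self, container_id):
        """Return a stopped container's address to the free pool."""
        self._free.append(self._leases.pop(container_id))

    @property
    def in_use(self):
        return len(self._leases)


allocator = SubnetAllocator("10.0.0.0/29")  # only 6 usable host addresses
first = allocator.start("web-1")
for i in range(2, 7):
    allocator.start(f"web-{i}")

# A seventh container cannot get an address until another one stops.
allocator.stop("web-1")
reused = allocator.start("web-7")
```

In this sketch `web-7` ends up with the address that `web-1` just released, so anything that cached the old mapping (DNS records, MAC tables, firewall rules) would now point at a different workload.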

While SDN offerings are beginning to add initial functionality for containers, Docker did not have a native solution until its acquisition of SocketPlane. At DockerCon, the work of the SocketPlane team was re-announced as “Docker Networking”, or technically the “libnetwork” functionality that is native within Docker. This brings native, distributed SDN to Docker container environments.

For networking professionals, it will be important to follow not only this space, but also the advancements around Service Discovery frameworks (e.g. Docker Swarm, Hashicorp Consul, CoreOS etcd) and the Scheduling frameworks (e.g. Mesos, Kubernetes). All of these will work in conjunction to manage not only the underlying network connectivity and routing, but also the application discovery mechanisms needed to manage large-scale, automated environments.

Data Persistence joins Container-Centric Applications

In the past, most container conversations have focused on modern applications rather than traditional or legacy applications. Given that containers tend to be ephemeral, they aligned best to the stateless portions of applications. Oftentimes, this aligned to the principles of 12 Factor Apps. But as containers begin to push towards more mainstream audiences, there will always be questions about how to deal with legacy applications, or with consistent operations between legacy and modern environments. This is where data persistence comes into the picture.

Until recently, the data persistence discussion was kept outside of the domain of containers. While containers could attach a volume to a persistent device (e.g. mount an NFS volume/directory), maintaining that association when the container stopped or died was either not possible or overly complicated. At DockerCon, a new focus area emerged – data persistence. Both ClusterHQ and EMC were focused on ways to bring Data Persistence to both the storage plugin framework (via “Project Flocker”) and natively within Docker (via “Project RexRay”). The two companies showed how this could work with open-source storage, public cloud storage and several EMC products (see theCUBE interview with ClusterHQ CEO Mark Davis and EMC VP of Advanced R&D Ken Durazzo).
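The distinction between container-local state and persistent state can be sketched with a toy model. The `Volume` and `Container` classes below are hypothetical and purely illustrative. They are not the Docker API, Flocker or RexRay. They only show the behavior being sought: data written to a volume outlives the container that wrote it and can be re-attached to a replacement.

```python
class Volume:
    """Named storage that exists independently of any container (toy model)."""

    def __init__(self, name):
        self.name = name
        self.files = {}


class Container:
    """Toy container with scratch space and an optional attached volume."""

    def __init__(self, name, volume=None):
        self.name = name
        self.scratch = {}  # container-local files, discarded on stop
        self.volume = volume

    def write(self, path, data, persistent=False):
        if not persistent:
            self.scratch[path] = data
            return
        if self.volume is None:
            raise ValueError("no volume attached")
        self.volume.files[path] = data

    def stop(self):
        # Local state is lost; the volume is detached but keeps its data.
        self.scratch.clear()
        self.volume = None


orders = Volume("orders-db")

old = Container("db-1", volume=orders)
old.write("/tmp/cache", "warm cache")
old.write("/data/orders", "order #1", persistent=True)
old.stop()

# A replacement container re-attaches the same volume and sees the data.
new = Container("db-2", volume=orders)
```

After `db-1` stops, its scratch files are gone, but `db-2` can still read `/data/orders` from the re-attached volume. The hard part in a real cluster is making that re-attachment work when the replacement container lands on a different host.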

Data Persistence has been a frequently discussed topic at several events this year, including Cloud Foundry Summit and VelocityConf, where projects are underway to add data persistence to Cloud Foundry and container scheduling projects like Mesos and Kubernetes. As more companies look to move towards container-centric application deployments, the topic of data persistence will be increasingly important.

Extensibility via Plugins

Many people like to position Docker as the anti-VMware company, looking to move companies from using virtual machines to containers. While the container discussion is much more complex and nuanced than that, Docker did take a page out of the VMware playbook by creating an ecosystem-expansion approach to working with partners by introducing the concept of “plugins”. Plugins allow Docker to maintain influence over the core architecture, while allowing 3rd-party companies or ISVs to add extensible functionality. Networking and Storage were the first two areas that were highlighted. WeaveWorks and Flocker (partnering with EMC) were both highlighted in the early announcements. There was also discussion that networking companies such as Cisco, Microsoft, Midokura, Nuage Networks and VMware are already working with Docker to extend the new plugin architectures to work with their SDN offerings.

This is positive news for customers considering Docker, because it signals that Docker will not be a closed platform and that customers will be able to keep using their existing infrastructure investments as they deploy container-based applications.

Added Focus on Security

As the growth of Docker dominated the headlines, so too did a parallel storyline – “Is Docker secure?” Not only did CoreOS “rkt” question the security architecture of Docker, but companies such as VMware questioned whether containers should ever be run without the presence of a virtual machine. Many security professionals have questioned whether Docker could be run without having to give root privileges to the containers.

While no system is ever completely secure, Docker made security a first-class citizen at DockerCon by including it in the Day 1 keynote. The Docker Security team, led by Diogo Monica, demonstrated the new “Docker Notary” security framework. They also highlighted several new Best Practices and Tools to help the security teams that will have to maintain container environments.

- Docker Notary

- Understanding Docker Security Best Practices

- Docker Bench Security (automate the security checks)

Native Docker security tools and frameworks, plugins from security startups such as Twistlock, and VMware security projects such as Photon and Lightwave should help Enterprise IT organizations begin to see how to mitigate the security concerns that may have previously existed when using Docker for production applications.

Portability Across Clouds (and Operating Systems)

As more companies look to build Hybrid Cloud environments for their IT needs, application portability becomes one of the highest priority requirements. In theory, Docker helps solve the portability challenge from desktop to any cloud. This reality was reinforced at DockerCon with GA announcements of public cloud container services and enhancements from industry leaders AWS, Google, Microsoft and VMware vCloud Air. This adds to existing support from cloud providers Digital Ocean, Rackspace, Tutum Cloud, Cloud 66 and others.

But it wasn’t just multiple cloud providers that added to the portability discussion. Microsoft had a major presence at the event, demoing how applications can be ubiquitously deployed to Docker containers running on either Linux or Windows (see video of Mark Russinovich (CTO, Microsoft Azure) demoing Docker on Windows and Linux).

This ubiquity of container portability will play a significant role in helping companies build Hybrid Cloud environments, especially for modern applications. While it won’t necessarily be the appropriate choice for legacy applications, it does begin to give CIOs a distinct path for how to think about Hybrid Cloud architectures. By combining this with commercial PaaS offerings that embrace containers, such as Cloud Foundry and Red Hat OpenShift, the possibility of Hybrid Cloud in 2015 is becoming more of a reality.

[Re-Examining] The Conversation: Enterprise Container Opportunities and Challenges

The Docker ecosystem, which is still the dominant player in the container marketplace, is growing and moving rapidly. At DockerCon, they took some significant steps towards building the framework that more IT organizations will need to run containers in production. Along with Docker, most of the major vendors aligned behind the technology to signify that it will be part of their roadmaps in 2H 2015 and beyond.

Docker and containers still have a ways to go before they cross the chasm and become mainstream for a large portion of Enterprise customers. There are still significant skill gaps to close before teams can operate these new environments, and developers able to create Cloud Native applications are still in the minority. Many of the container technologies that debuted over the past few weeks are still experimental or in v1.0 stages of deployment into the market. But as can be seen by the attendance at events in June, interest in containers is very strong. Early adopters will continue to find interesting new ways to use the technology, with Docker and others hoping that they will contribute their learnings (and code) back to the community.

Action Item: As stated in the previous report, containers will create significant disruption to several existing technology segments in the market – virtual machines, configuration management (CI/CD, Automation), Hybrid Cloud, PaaS and modern applications. If your IT organization has projects involving these areas, the time is NOW to begin exploring the technology and building the skills necessary to consider them in your projects.

See full coverage from DockerCon on SiliconANGLE.tv